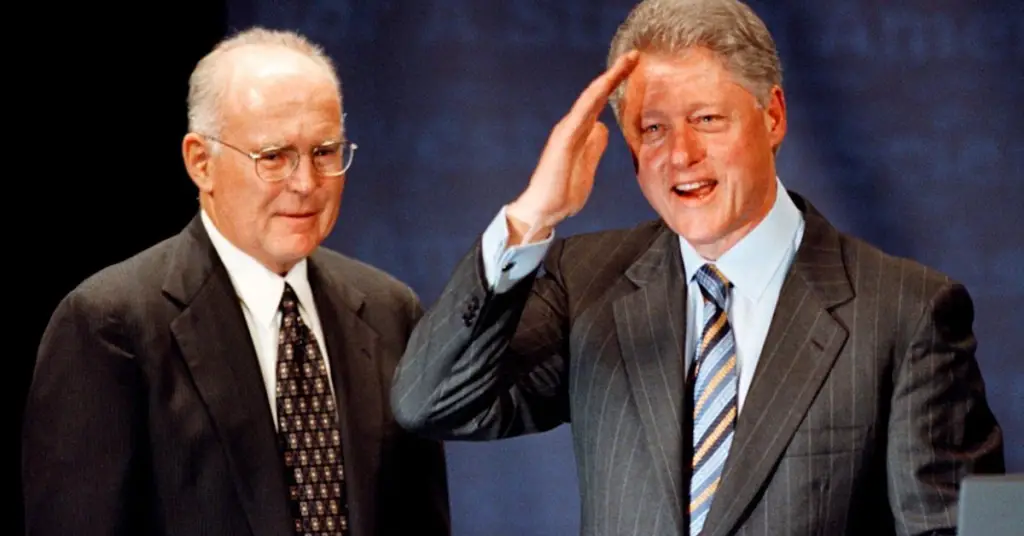

The “iron rule” that once kept the U.S. ahead of China in technological progress has just come to an end, and the consequences are huge. This rule, known as “Moore’s law,” wasn’t a law of physics, but an observation made by Intel co-founder Gordon Moore. He noted that the number of transistors on a chip doubled roughly every two years, which translated into exponential growth in computing power. This rapid growth transformed industries and society, allowing us to build technological wonders that were once thought impossible.
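To get a feel for how quickly a two-year doubling compounds, here is a minimal Python sketch. The function name `moores_law_factor` and the fixed two-year period are illustrative assumptions, not figures measured from any real chip.

```python
def moores_law_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Growth factor after `years` if a quantity doubles every `doubling_period_years`.

    Illustrative only: assumes a perfectly steady doubling period.
    """
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    for years in (2, 10, 20, 40):
        # 40 years of doubling every 2 years is 20 doublings, about a million-fold.
        print(f"{years:>2} years -> x{moores_law_factor(years):,.0f}")
```

Under that assumption, ten years gives a 32-fold increase and forty years gives roughly a million-fold increase, which is why even a modest slowdown in the doubling period matters so much.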

Robert X. Cringely, a well-known tech columnist, once described Moore’s law as a creator of immense wealth, a force that shaped social values, and a predictor of where our culture was headed. Now, however, that belief in constant growth is about to face a reality check. As technology reaches the limits of what’s physically possible, we will no longer enjoy the luxury of seemingly limitless growth. The future will force us to get creative and work within the limits of what’s achievable.

Moore’s law was like a gift to the tech industry. It didn’t just make computers more powerful; it allowed new products to emerge, because each year’s chips could be smaller and more powerful than the last. This led to advances in everything from noise-canceling earbuds to massive data centers, making processes more efficient and boosting GDP.

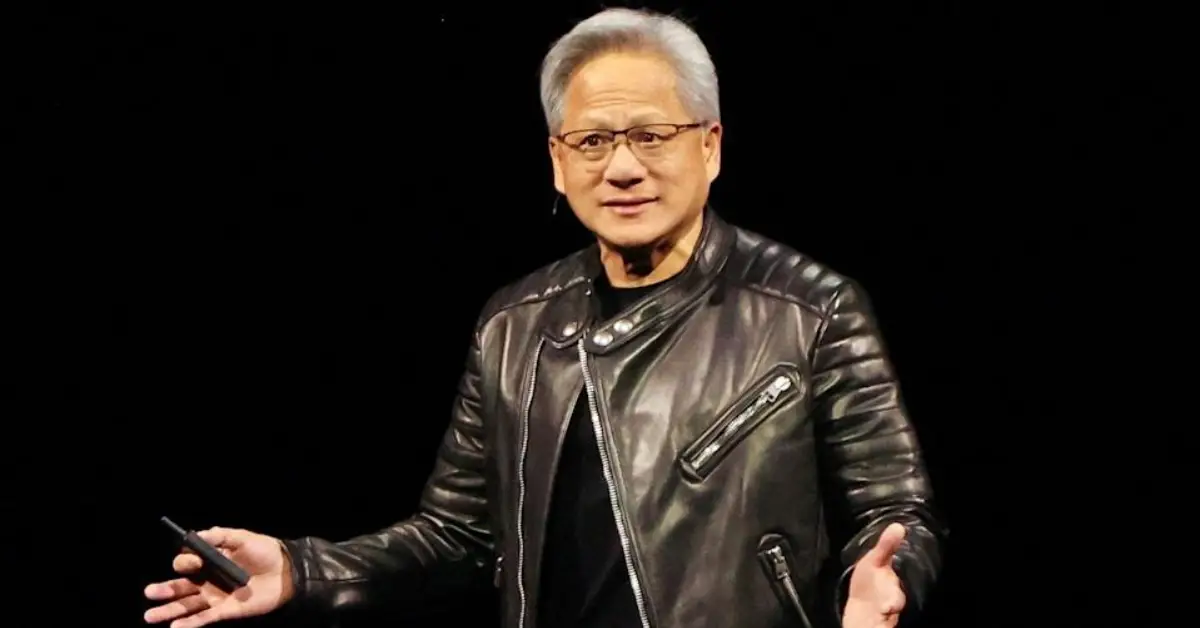

But cracks in Moore’s law began to appear around the turn of the century. In 2015, Gordon Moore himself predicted that the law would come to an end within a decade. Recent developments suggest he was right. In 2022, Nvidia’s chief executive, Jensen Huang, said that Moore’s law is “dead and buried,” and the latest developments in processor technology support this conclusion.

What killed Moore’s law? The laws of physics, specifically the limits on how small transistors can be made and how densely they can be packed onto a microchip. Today, the chips in devices like the iPhone have 19 billion transistors packed into a space the size of a fingernail. Some features of these transistors now measure just a few nanometers, a scale comparable to the width of a strand of human DNA.

Though there are still some tricks the chip industry can use to stretch Moore’s law a little longer, like 3D stacking and chiplets, the era of explosive growth in computing power is over. In the future, powerful chips will be less widely available, and they won’t be as cheap or easy to obtain.

So, what happens now? The answer is simple: we have to get smarter. For years, Moore’s law allowed ever-faster hardware to compensate for inefficient software. With that advantage fading, software needs to become more efficient. The sloppy coding that became common in the age of abundance is no longer acceptable. For example, Microsoft’s Windows 11 drains the battery faster than a free Linux alternative running on the same hardware.

In a post-Moore world, engineers will need to be more careful and innovative, focusing on optimizing software rather than relying on endless hardware improvements. One solution might be better software design, as suggested by top computer scientists like Butler Lampson, one of the architects of modern computing. We can no longer afford to be lazy with our code.
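As a small illustration of what “careful” code can mean in practice, the sketch below solves the same task two ways: checking a list for duplicates. Both function names are hypothetical and are not taken from any product or researcher mentioned here. The first version compares every pair of items, while the second remembers what it has already seen.

```python
def has_duplicates_slow(items):
    """Compare every pair of items: roughly n*n/2 comparisons for n items."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_fast(items):
    """Track items already seen in a set: about n lookups for n items.

    Assumes the items are hashable (numbers, strings, tuples, and so on).
    """
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False


if __name__ == "__main__":
    data = list(range(5_000)) + [42]  # one duplicate, placed at the very end
    print(has_duplicates_slow(data), has_duplicates_fast(data))
```

Both functions return the same answer, but on large inputs the second does far less work on the same hardware. That kind of saving used to be optional when next year’s chip would hide the difference.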

Artificial intelligence (AI) has been living in a world of its own, relying on specialized, expensive chips to run large language models. But even within the AI boom, we can learn some important lessons. When an unknown team in China released their large language model, DeepSeek, the West was shocked.

Despite lacking the resources of Western companies, the Chinese team made smart decisions in software design that reduced the hardware costs of running the model. Their approach was based on principles that a first-year computer science student would understand, but it showed that smart software design can make a huge difference.

The focus in AI development is no longer just on hardware but on optimizing software to run more efficiently. As the cost of computing power rises, companies need to prioritize software optimization to stay competitive. This means that the race is no longer about who can build the most powerful hardware but who can write the most efficient software.

In the end, we’re not in an AI arms race, but an optimization race. The future of computing will depend on who can write better, more efficient software that maximizes the potential of the limited hardware available. The big question is: will the software industry rise to the challenge, or has it become too bloated to keep up?
